Four levels of validation - A simple start

What do you mean my model isn't valid if my plot matches the data?

Hello and welcome back,

You have likely asked or heard the question "But is the model valid?" at some point. For me, as someone who follows the vast literature on Agent-Based Modelling in economics (see here for an intro), this is almost the token question or critique that gets thrown about.

The real question is: what does it mean for a model to be valid? And how valid can it be, given that a model is by nature a simplification of the complexity of the real world?

What is validation?

My starting point is Leigh Tesfatsion's collection of articles, which has remained relevant to this day, and in particular its reference to "Validating Computational Models" by Kathleen Carley (1996).

In short, having a valid model means having the "right" model, in the sense that the abstract model that was chosen accurately reflects the real-world phenomenon being modelled. This is still rather general, and leaves open a huge range of questions about what it means to accurately reflect a real-world phenomenon. A more intriguing formulation of that question might be: how well does the model structure represent the data generating process (DGP) underlying societal reality, given that the DGP cannot be directly observed? For instance, we do not observe every action of every person at every time, but rather aggregated data. To actually answer that question, we need to consider our model from various perspectives.

Different levels of validation?

Tesfatsion defines validation along four lines, but I find them somewhat unsatisfying: the questions under each heading only narrow the scope of the issues we are considering, from the inputs to models, to the behavioural assumptions in models, and then to the output of the model.

1. Input Validation

Are the exogenous inputs for the model empirically meaningful and appropriate for the purpose at hand? (Exogenous model inputs include: initial state conditions, functional forms, random shock realizations, data-based parameter estimates, and/or parameter values imported from other studies)

2. Process Validation

How well do the physical, biological, institutional, and social processes represented within the model reflect real-world aspects important for the purpose at hand?

Are all process specifications consistent with essential scaffolding constraints, such as physical laws, stock-flow relationships, and accounting identities?

3. Descriptive Output Validation

How well are model-generated outputs able to capture the salient features of the sample data used for model identification? (in-sample fitting)

4. Predictive Output Validation

How well are model-generated outputs able to forecast distributions, or distribution moments, for sample data withheld from model identification or for new data acquired at a later time? (out-of-sample forecasting)
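The difference between descriptive (in-sample) and predictive (out-of-sample) output validation can be sketched in a few lines. This is only a minimal illustration under loud assumptions: the "observed" data is synthetic, the "model" is a toy that draws from a normal distribution calibrated on the in-sample part, and the helper names `moments`, `moment_gap` and `split_sample` are hypothetical, not taken from Tesfatsion.

```python
import random
import statistics


def moments(series):
    """Return the (mean, sample standard deviation) of a series."""
    return statistics.mean(series), statistics.stdev(series)


def moment_gap(simulated, observed):
    """Absolute gaps between simulated and observed mean and std."""
    sim_mean, sim_std = moments(simulated)
    obs_mean, obs_std = moments(observed)
    return abs(sim_mean - obs_mean), abs(sim_std - obs_std)


def split_sample(observed, train_fraction=0.7):
    """Split a series into an identification part and a withheld part."""
    cut = int(len(observed) * train_fraction)
    return observed[:cut], observed[cut:]


# Synthetic stand-in for observed data (an assumption, not real data).
rng = random.Random(0)
observed = [rng.gauss(2.0, 1.0) for _ in range(200)]
in_sample, out_of_sample = split_sample(observed)

# Toy "model": calibrated only on the in-sample moments.
mu, sigma = moments(in_sample)
simulated = [rng.gauss(mu, sigma) for _ in range(1000)]

# Descriptive output validation: compare against the data used for fitting.
descriptive_gap = moment_gap(simulated, in_sample)
# Predictive output validation: compare against the withheld data.
predictive_gap = moment_gap(simulated, out_of_sample)
```

A small descriptive gap only says the model can reproduce the moments it was fitted on; the predictive gap is the more demanding check because the withheld data played no part in calibration.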

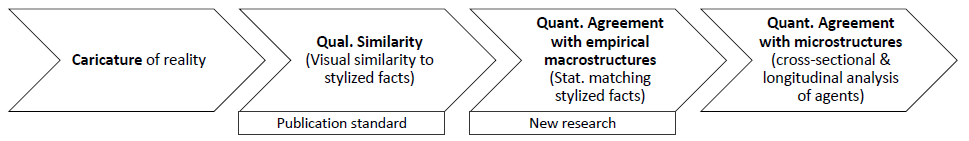

Axtell & Epstein (1994) - Levels of Empirical Validation

To actually attribute a "category" or "level" of validation that distinguishes between different models, I find it more instructive to consider the four levels of validation that were proposed by Axtell & Epstein in 1994.

In essence, there are several levels at which we can take the model to the data in ABMs, all the way from not at all (left-hand side) to matching both macro and micro structures quantitatively. This particular formulation also does not require that we match a single time series exactly, but rather that we match the stylised facts exactly and do so at many levels.

What is missing in the simple replication of stylised facts is an answer as to whether the dynamics that generated these stylised facts (I am thinking here primarily about the non-time-series facts, such as the distribution of firm sizes) are actually captured by the model. This is a particularly thorny question in general: how can we be sure we capture the dynamics of the real world, at least partially? It is especially hard if we can only observe the development of time series at aggregate levels, and if these time series are themselves based on the needs of existing models.

Time series prediction is a laudable goal, and my personal hope is that it can be a great validation tool as well as making ABMs a "forecasting tool" for practitioners. However, in simulation studies with ABMs we are typically in the position of having a distribution of realisations that can have a large variance. The question then is - how do I compare the distribution of possible outcomes from an ABM to the single time series we observe in reality? (This is similar to the scenario-generator argument for ABMs.) In this regard, I think that precise time-series prediction might be a somewhat erroneous exercise.
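One way to make the comparison between many simulated realisations and a single observed series concrete is to build a pointwise band from the Monte Carlo runs and ask how often the observed series falls inside it. The sketch below is only an illustration under stated assumptions: `simulate_run` is a hypothetical stand-in for an ABM run (a random walk with drift), the "observed" series is synthetic, and the 5%-95% band is an arbitrary choice.

```python
import random


def simulate_run(rng, steps, drift=0.1, noise=1.0):
    """Hypothetical stand-in for one ABM run: a random walk with drift."""
    level, path = 0.0, []
    for _ in range(steps):
        level += drift + rng.gauss(0.0, noise)
        path.append(level)
    return path


def quantile(sorted_values, q):
    """Simple rank-based quantile of an already sorted list."""
    last = len(sorted_values) - 1
    index = min(last, max(0, round(q * last)))
    return sorted_values[index]


def pointwise_band(runs, lower=0.05, upper=0.95):
    """For each time step, return the (lower, upper) quantiles across runs."""
    band = []
    for values in zip(*runs):
        ordered = sorted(values)
        band.append((quantile(ordered, lower), quantile(ordered, upper)))
    return band


def coverage(observed, band):
    """Share of time steps at which the observed value lies inside the band."""
    inside = sum(lo <= x <= hi for x, (lo, hi) in zip(observed, band))
    return inside / len(observed)


rng = random.Random(42)
steps = 50
runs = [simulate_run(rng, steps) for _ in range(200)]  # Monte Carlo runs
observed = simulate_run(rng, steps)  # synthetic "reality", an assumption
band = pointwise_band(runs)
share_inside = coverage(observed, band)
```

The point of the design is that the observed series is treated as one draw to be judged against the simulated distribution, rather than as a path the model has to reproduce step by step.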

Should we even bother with validation?

The question is - is an empirically validated model a better model? There are two levels to this question. In the first place, it really depends on which model you are considering. Several categories of models exist in economics (upcoming post), and not all of them should be empirically matched with the data. For instance, small toy models are not designed to be fitted to the data but rather to highlight a particular mechanism. Most macroeconomic ABMs, however, are designed to provide a representation of reality proper, whether that is the large set of firms or the large set of all agents in the economy. In this regard, comparing them to data

Overall, I would say that, if anything, an empirical validation check can serve as a sanity check - am I going in totally the wrong direction? - and with that as a check of some of the assumptions of the model. It is also a good way of assessing

The real issue is that an ideal validation would happen at the micro-scale, but large-scale micro-data covering both a large cross-section of the population and a sufficiently long period of time essentially doesn't exist, or isn't available to researchers for validating their models.

A note: Verification is ... NOT ... Validation

Verification of a model is typically closer to what Tesfatsion deems "Process validation". A model, particularly one that is used computationally, should be considered verified if the code that has been built matches the theoretical model. In other words, verification asks whether your code is correct.

Note that a model can be verified but not valid, and that a valid model is not necessarily verified. We are human after all, and mistakes happen.

At this point I should mention that I share the belief of Meissner: the code is the model. While on a theoretical level the model may be expressed in natural language and equation form, it is important to also include the source code. Decisions in the implementation, such as the order in which agents are called, can have a strong effect on the actual simulation outcomes.
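The effect of activation order is easy to see in a toy example. The sketch below is purely illustrative and not taken from any particular ABM: agents with fixed demands are served one after another from a limited stock, and the function name `run_round`, the agent labels and the numbers are all hypothetical.

```python
def run_round(demands, order, stock):
    """Serve agents sequentially; each takes its demand if enough stock remains,
    otherwise whatever is left."""
    allocation = {agent: 0 for agent in demands}
    for agent in order:
        taken = min(demands[agent], stock)
        allocation[agent] = taken
        stock -= taken
    return allocation


# Hypothetical demands; total demand (12) exceeds the available stock (8).
demands = {"a": 4, "b": 3, "c": 5}

alphabetical = run_round(demands, ["a", "b", "c"], stock=8)
reverse = run_round(demands, ["c", "b", "a"], stock=8)

print(alphabetical)  # {'a': 4, 'b': 3, 'c': 1}
print(reverse)       # {'a': 0, 'b': 3, 'c': 5}
```

The same "theoretical" rule (agents buy what they want while stock lasts) produces different micro-level outcomes depending on a choice that is often only visible in the source code.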